DeepSeek, Where Are You?

If you prefer the video version, check it out here: https://youtu.be/Q3lCM2IoD9g?si=b_mqnHEx3aWpChlN

Remember when DeepSeek was supposed to be China’s ChatGPT killer?

For a moment, it looked like OpenAI had a real competitor—one that popped out of nowhere, sparked global headlines, and even triggered a trillion-dollar selloff in tech stocks.

And then… nothing.

Let’s see how DeepSeek became 2025’s AI version of the Segway: overhyped, geopolitically charged, and ultimately forgotten.

The Beginnings

Let’s rewind to January 2025. DeepSeek’s R1 model launches, claiming performance on par with OpenAI’s o1 in math, coding, and reasoning, at a fraction of the cost.

Within a week, it’s the number one free app on the U.S. App Store. U.S. hedge funds panic. Nvidia shares tank. Before long, Apple and Google are fielding awkward calls from regulators.

It was marketed as a technical miracle. But behind the curtain, DeepSeek was also a geopolitical chess piece—and that’s where things started getting murky.

From Zero to App Store Hero

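To most of the world, DeepSeek seemed to come out of nowhere, although the company had been releasing models since 2023. Its R1 model is built on a Mixture‑of‑Experts (MoE) architecture. Instead of running the entire network for every token, it sends each token through a small subset of specialized “expert” sub-networks. That let it scale its capacity without burning through massive compute.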

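Rival frontier models reportedly cost well over $100 million to train. DeepSeek said the final training run of V3, the base model R1 is built on, cost roughly $5.6 million in GPU time. That figure leaves out earlier research and experiments.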

That’s like building a rocket with soda cans and duct tape—and still making it to orbit!

And it did this using Nvidia’s H800, a cut-down chip designed to comply with U.S. export controls. That sent a very loud message:

“We don’t need your fancy H100s.”
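To make the Mixture‑of‑Experts idea a bit more concrete, here’s a toy sketch. It is *not* DeepSeek’s actual implementation. A router scores every expert for a given input, only the top‑k experts actually run, and their outputs are blended using softmax weights. The expert count, the `top_k` value, and the tiny linear “experts” are all illustrative assumptions. For scale, DeepSeek-V3 (the base behind R1) reportedly has 671 billion parameters in total but activates only about 37 billion of them per token.

```python
import math
import random
from typing import Callable, List, Tuple

Vector = List[float]


def make_linear_expert(weights: List[Vector]) -> Callable[[Vector], Vector]:
    """Hypothetical toy expert: a plain matrix-vector product (no bias, no activation)."""
    def expert(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return expert


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    router: List[Vector],
    experts: List[Callable[[Vector], Vector]],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route x to the top_k highest-scoring experts and blend their outputs.

    Returns the combined output and the indices of the experts that actually ran.
    Assumes every expert maps a vector of length len(x) to one of the same length.
    """
    # 1. Router: one score per expert (dot product of x with that expert's router row).
    scores = [sum(r * xi for r, xi in zip(row, x)) for row in router]
    # 2. Keep only the top_k experts. The rest never run, which is where the compute saving comes from.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # 3. Normalize the chosen experts' scores into mixing weights.
    weights = softmax([scores[i] for i in chosen])
    # 4. Run only the chosen experts and take the weighted sum of their outputs.
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    random.seed(0)
    dim, num_experts = 4, 8  # toy sizes, purely illustrative

    def rand_matrix(rows: int, cols: int) -> List[Vector]:
        return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

    experts = [make_linear_expert(rand_matrix(dim, dim)) for _ in range(num_experts)]
    router = rand_matrix(num_experts, dim)
    x = [0.5, -1.0, 0.25, 2.0]
    y, used = moe_forward(x, router, experts, top_k=2)
    print(f"ran {len(used)} of {num_experts} experts: {used}")
    print("output:", [round(v, 3) for v in y])
```

Running it prints which 2 of the 8 experts were picked for that input. The other 6 do no work at all. DeepSeek’s published MoE design adds refinements, such as fine-grained expert segmentation and always-on shared experts, that this sketch deliberately leaves out.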

The Campaign

The R1 model was efficient and fast. Its weights were openly released under the MIT license. It was suddenly everywhere.

And the Chinese government? They were loving it.

Media campaigns, influencer videos, and state-affiliated accounts all pushed the same message:

“China is back in the AI race—and ahead.”

Investor Marc Andreessen called it AI’s “Sputnik moment.” Only this time, it wasn’t about rockets. It was about reasoning, and perhaps about reclaiming technological pride.

The Hype Hits the Fan

But hype doesn’t last. Pretty soon, people started asking questions. Like:

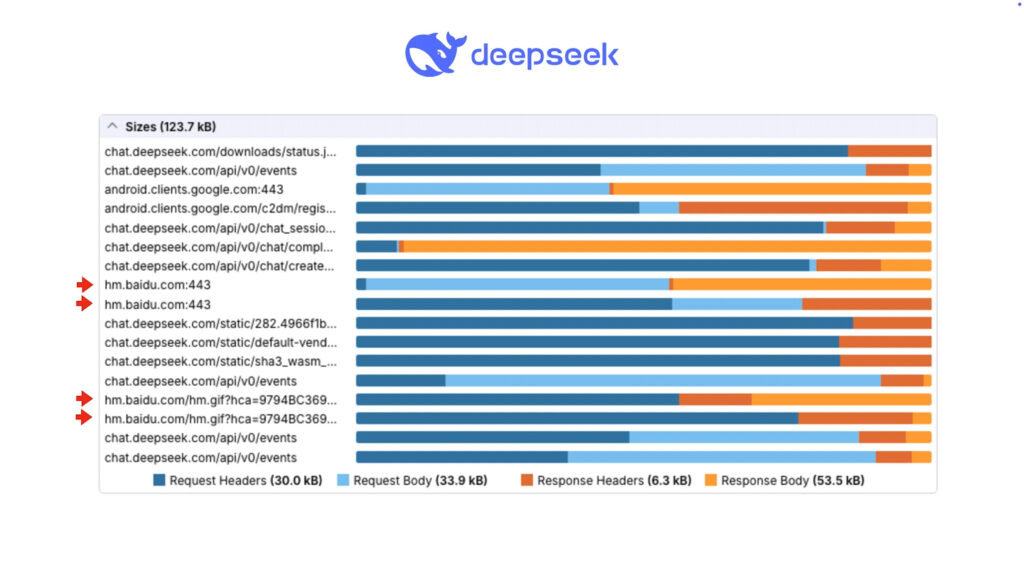

– Why does this app phone home so much?

– Why is it hitting Chinese servers?

– Who exactly is behind DeepSeek?

Turns out, DeepSeek was funded by High-Flyer, a Chinese quantitative hedge fund co-founded by DeepSeek’s founder, Liang Wenfeng.

The devs? Based in Hangzhou.

And as researchers dug deeper, connections to ByteDance infrastructure—and even military-linked research—began to surface.

National Security Concerns

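In June, a senior U.S. State Department official told Reuters that DeepSeek was aiding China’s military and intelligence operations and had sought to use shell companies to get around chip export controls.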

Germany’s privacy watchdogs went further. Berlin’s data protection commissioner said DeepSeek illegally transfers users’ personal data to China in breach of GDPR, and that it shouldn’t even be in Apple or Google’s stores.

Then came the bans:

- Australia, South Korea, and Taiwan pulled it from government devices.

- Germany demanded Apple and Google remove it entirely.

- France & Canada launched investigations.

Suddenly, “world-class AI” started looking more like a global security risk.

Questionable User Safety

On January 27, DeepSeek temporarily restricted new user registrations after being hit by what it described as large-scale malicious attacks. The attacks repeated in late February.

As it turned out, the company’s systems also had serious security flaws, including databases left open to the internet with no authentication at all.

Quoting from the article on the Cyber Management Alliance website:

“These unsecured databases contained over one million log entries of DeepSeek’s operations – including users’ chat histories in plaintext, API keys, secret access tokens, backend system details, and other highly sensitive information.” – see https://www.cm-alliance.com/cybersecurity-blog/deepseek-cyber-attack-timeline-impact-and-lessons-learned.

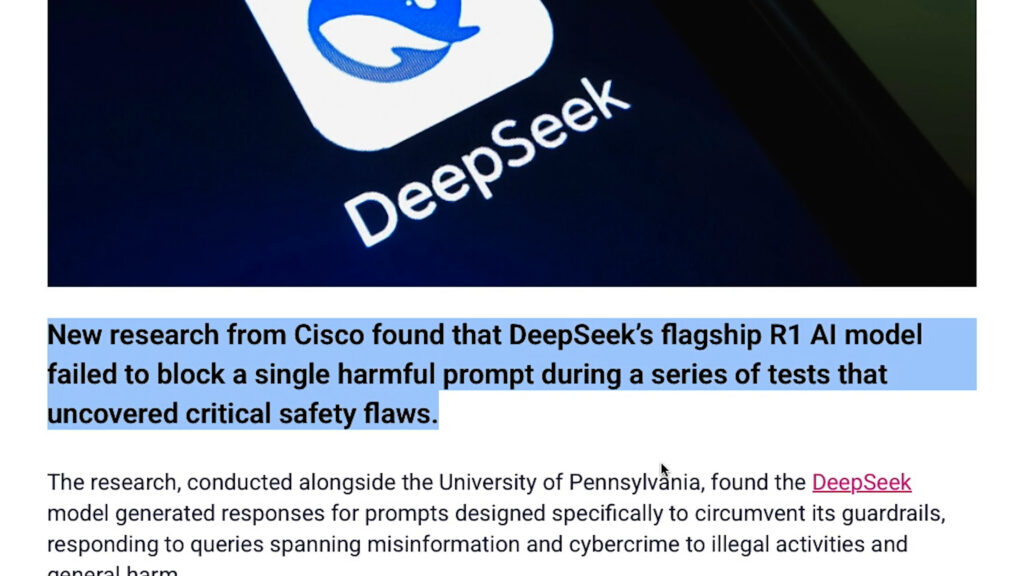

One independent audit, by Cisco and the University of Pennsylvania, tested DeepSeek R1 against 50 harmful prompts from the HarmBench benchmark (https://blogs.cisco.com/security/evaluating-security-risk-in-deepseek-and-other-frontier-reasoning-models).

The result? A 100% attack success rate: R1 failed to block a single one of them.

OpenAI and Anthropic models have layers of safety filters, reward modeling, and alignment training. DeepSeek? Not so much*.

(*) Aside from some guardrails that stop it from engaging with content that conflicts with the views of the Chinese government.

The Vanishing Act

Then, just as quickly as it appeared… it vanished from the headlines.

Why? Well, partly because R2 never arrived.

There were whispers about R2 being “five times more powerful.”

There were countdowns. Promises. Teasers.

And then—nothing.

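Why? A big part of the answer: in mid-April, the U.S. closed another export loophole by requiring licenses for Nvidia’s H20, a cut-down chip built specifically for the Chinese market. That effectively cut off the supply of chips DeepSeek and other Chinese labs had been counting on.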

In July, Washington reversed course and signaled that Nvidia could resume H20 sales to China, but the damage had already been done.

With R2 stuck in limbo, DeepSeek lost its edge.

Meanwhile, OpenAI rolled out GPT‑4.5. Anthropic’s Claude 4 models showed up with sky-high benchmarks. Google Gemini started catching up in practical coding tasks.

Suddenly, DeepSeek wasn’t revolutionary anymore. It was… background noise.

The Bigger Lesson

Even Chinese social media, which had once cheered on DeepSeek, moved on.

Some people still use it, sure. But the app is no longer at the center of AI conversations—not in China, and definitely not in the West.

Its few remaining advantages? Open weights. Research papers. A handful of niche developer tools.

But nothing that screams “global disruption” anymore.

So what’s the takeaway here?

First: a single model release doesn’t make you a global AI leader. That takes time, infrastructure, safety layers, ecosystem, and trust.

Second: privacy and data security still matter. You can have a blazing-fast chatbot, but if it leaks data like a sieve and pings servers in questionable locations? Good luck with long-term adoption.

And third—well, here comes the “I told you so” moment:

Don’t type anything into any AI tool—DeepSeek, ChatGPT, Claude, whatever—that you wouldn’t be okay posting publicly.

Because whether it’s logged, cached, leaked, or intercepted, once it’s on someone else’s server, it’s no longer yours to control.

I pointed this out months ago: DeepSeek sends multiple requests to Baidu, China’s version of Google. See https://youtu.be/JNbuCcacXrk?si=w4dvaEhPe8e4bsj5

That alone should’ve been enough to make people pause.

ChatGPT and Claude don’t do that, true. But they do send everything you type to their own servers.

There is no such thing as guaranteed privacy in cloud-based AI. Ever.

Leaks happen. Backdoors happen. And if your prompt is something sensitive—your health records, your passwords, your company secrets?

You’re gambling. Period.

Final Thoughts: Hype Is Not a Strategy

The DeepSeek story is a cautionary tale about how fast tech can move… and how much faster it can be forgotten.

One week you’re the future. The next, you’re flagged as a national security risk and can’t even ship your next update.

DeepSeek had its moment. It poked the bear. It freaked out investors. It gave China a temporary boost of AI optimism.

But in the end? It couldn’t outrun the trust gap.

And that’s something you can’t solve with clever architecture or cheap processors.

If you found this breakdown helpful, give it a thumbs up, and maybe share it with someone still installing random AI apps off the App Store.

And if you want to go deeper into AI privacy, check out my video on why sharing secrets with AI is a huge mistake: https://youtu.be/JNbuCcacXrk?si=w4dvaEhPe8e4bsj5

Stay safe, stay smart, and please, stop typing your credit card info into chatbots. 😉

Thanks for reading!

🎓 Check out my courses on:

– Teachable: https://learnwithkarl.teachable.com

– Udemy: https://www.udemy.com/user/karolynyisztor/

– LinkedIn Learning: https://www.linkedin.com/learning/instructors/karoly-nyisztor?u=2125562

– Pluralsight: https://www.pluralsight.com/profile/author/karoly-nyisztor

🌐 Connect with me:

Website: http://www.leakka.com

Twitter: https://twitter.com/knyisztor
